ChatGPT Under Attack for Its Water Use – 10,000 Liters Per Day Impact

Artificial intelligence (AI) has become a hot topic, and ChatGPT, developed by OpenAI, is at the forefront of the discussion. However, it’s not only its capabilities and potential that are being debated but also its environmental impact. One of the more surprising critiques concerns the water used in training and operating AI systems. This might seem counterintuitive at first, since we often think of AI as purely digital, but the software runs on physical hardware that has real environmental consequences.

Understanding AI Training and Power Consumption

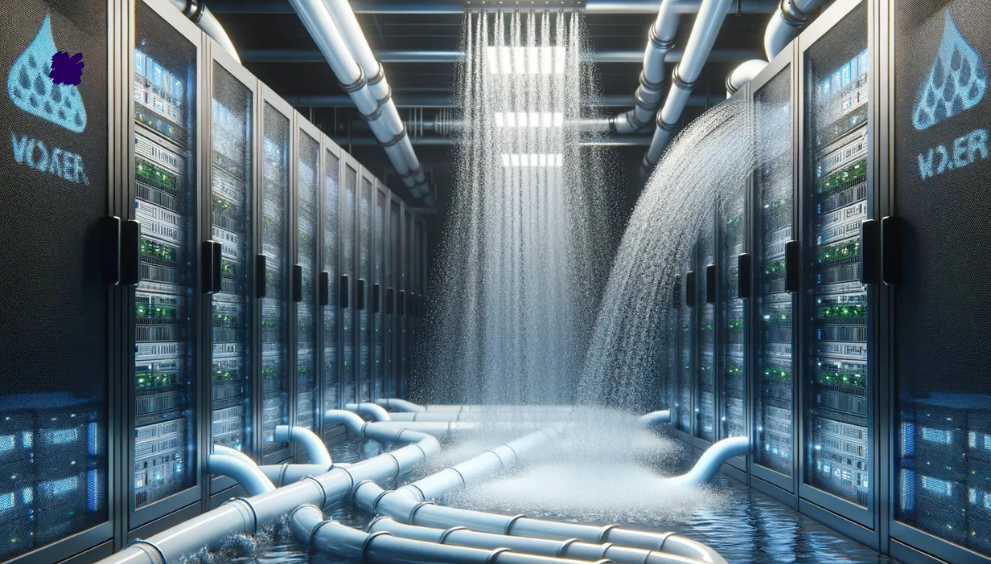

AI models like ChatGPT are incredibly resource-intensive. To understand how water fits into this picture, we need to look at the process behind AI training. When a model like ChatGPT is being developed, vast amounts of computational power are required to process data, perform complex calculations, and adjust the model’s parameters. This involves powerful data centers running thousands of servers 24/7.

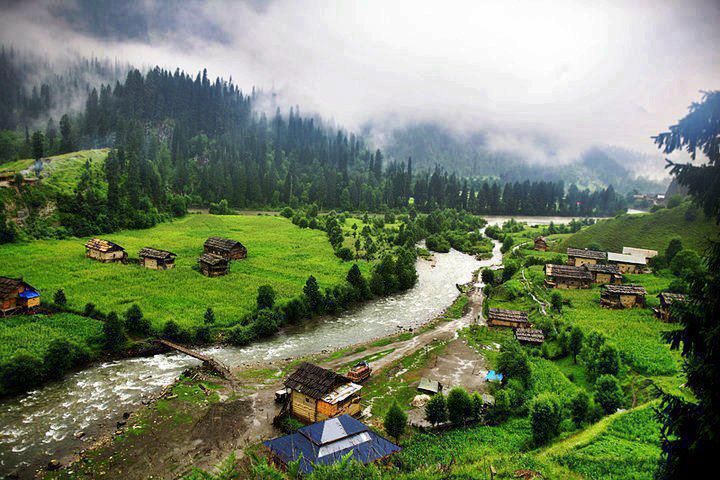

Now, data centers, in addition to requiring electricity to power the servers, also need cooling systems to prevent overheating. These cooling systems are often water-based, meaning they use water to regulate the temperature of these massive machines. Depending on the design, water cooling can use large amounts of water, either by circulating it through the system directly or evaporating it to cool the air.

While this cooling method is efficient, it’s not without environmental costs. The need for large quantities of water, especially in regions where water resources are already strained, raises concerns about the sustainability of such systems. The environmental footprint of AI, in particular, is often underestimated by the general public, as most people focus on the digital or carbon aspects of AI technology without considering the hidden water consumption.

Water Usage in Data Centers

Data centers are the backbone of cloud computing and AI operations, but they come at a cost. Cooling systems in these centers can use millions of liters of water daily, which is a significant issue in water-scarce areas. For example, in hot climates, data centers require water for evaporative cooling, which can cause a considerable depletion of local water resources. Furthermore, in areas where water is drawn from freshwater sources, this can exacerbate water shortages, affecting both local ecosystems and communities.
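To get a feel for how a facility could reach "millions of liters" in a day, here is a rough back-of-the-envelope sketch in Python. It assumes the water usage effectiveness (WUE) metric, which expresses on-site water use in liters per kilowatt-hour of IT energy. The function name and the example figures (a 50 MW IT load and a WUE of 2.0 L/kWh) are hypothetical, chosen only to illustrate the arithmetic; they are not measurements of any real data center or of ChatGPT.

```python
def daily_cooling_water_liters(it_power_kw: float,
                               wue_l_per_kwh: float,
                               hours: float = 24.0) -> float:
    """Estimate on-site cooling water (liters) used over `hours`.

    it_power_kw   -- average IT equipment load in kilowatts (assumed value)
    wue_l_per_kwh -- water usage effectiveness in liters per kWh (assumed value)
    """
    if it_power_kw < 0 or wue_l_per_kwh < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    energy_kwh = it_power_kw * hours       # energy consumed by IT equipment
    return energy_kwh * wue_l_per_kwh      # liters of water for that energy


if __name__ == "__main__":
    # Hypothetical facility: 50 MW IT load, WUE of 2.0 L/kWh.
    liters = daily_cooling_water_liters(it_power_kw=50_000, wue_l_per_kwh=2.0)
    print(f"Estimated water use: {liters:,.0f} liters per day")
```

Real values vary widely with climate, cooling design, and season, so an estimate like this is only a starting point for comparing scenarios.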

The situation becomes even more concerning when we consider that AI is increasingly being used for commercial purposes. As demand for AI services like ChatGPT continues to rise, so does the energy and water consumption required to support these systems.

The Bigger Picture: Environmental Impact

The environmental impact of AI isn’t just about water; it’s also about energy use and carbon emissions. Training large models requires enormous energy consumption, much of which comes from non-renewable sources. While AI companies like OpenAI are taking steps to reduce their carbon footprints, such as using renewable energy and improving energy efficiency, the environmental consequences remain significant.

The criticism around water usage is a part of a broader conversation about the sustainability of AI. Experts are increasingly questioning whether the rapid growth of AI is worth the environmental toll it takes, especially when it comes to water consumption in already overburdened regions.

Are There Alternatives?

Given the concerns about water consumption and the environmental footprint of AI, researchers and engineers are exploring alternative cooling technologies. These alternatives include liquid cooling, where coolants other than water are used, and even air cooling, which uses less water but requires more energy. Furthermore, more efficient models and algorithms can reduce the need for extensive training and computational power, ultimately lowering the amount of energy and water consumed.

AI companies are also looking into relocating their data centers to cooler climates where water usage can be minimized, or they are building data centers near renewable energy sources to reduce the carbon impact. These solutions are still in development, and it may take time before they become standard practice.

Conclusion

While the issue of water usage in AI training is a valid concern, it’s part of a much broader conversation about the sustainability of modern technology. As AI continues to develop and expand, it’s essential that the environmental costs, including water consumption, are considered and addressed. Companies like OpenAI, along with the broader tech community, must find ways to balance innovation with environmental responsibility.